This article records the whole process of installing Hadoop, Hive, ZooKeeper, HBase, and Kylin. The software versions are as follows:

hadoop-2.7.3.tar.gz

apache-hive-2.1.1-bin.tar.gz

hbase-1.2.4-bin.tar.gz

zookeeper-3.4.9.tar.gz

apache-kylin-1.6.0-hbase1.x-bin.tar.gz

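Downloading directly from each project's official site is slow; the packages can also be found on the China Internet Network Information Center (CNNIC) open-source mirror.

The operating system is Ubuntu 14.04.4 LTS, and the Java version is 1.8.0_111.

MySQL was installed with tasksel, which ships with the operating system; its version is 5.5.53-0ubuntu0.14.04.1.

To avoid permission problems, every command below is run as root.

All packages are downloaded to /usr/local/src and, for simplicity, extracted under /opt, for example /opt/hadoop-2.7.3.

The server's hostname is master.xyduan.com, and the domain name master.xyduan.com also resolves to this server. An SSH key pair has been generated and the public key added to the server's own authorized_keys. The root@master prompt shown in the screenshots and console output below comes from the default PS1 setting.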

Setting the Java environment variables

Download JDK 8; this article uses jdk-8u111-linux-x64.tar.gz as the example.

Extract the downloaded jdk-8u111-linux-x64.tar.gz to /usr/local/jdk1.8.0_111.

Add the following to /etc/profile:

export JAVA_HOME=/usr/local/jdk1.8.0_111

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:$PATH

Run source /etc/profile.

If java -version now prints output similar to the following, the Java environment is installed correctly:

root@master:/opt# java -version

java version "1.8.0_111"

Java(TM) SE Runtime Environment (build 1.8.0_111-b14)

Java HotSpot(TM) 64-Bit Server VM (build 25.111-b14, mixed mode)
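The check above can also be scripted. The following is a minimal sketch, not part of the original setup: the function names java_version_from_output and check_java_home are hypothetical helpers introduced here for illustration. The first extracts the quoted version string from the first line of the java -version output, and the second only tests that a given directory contains an executable bin/java.

```bash
# java_version_from_output: read `java -version` output on stdin and print
# the quoted version string found on its first line (for example 1.8.0_111).
# Hypothetical helper name, introduced for this sketch.
java_version_from_output() {
  sed -n '1s/.*version "\([^"]*\)".*/\1/p'
}

# check_java_home DIR: succeed only if DIR contains an executable bin/java.
# Hypothetical helper name, introduced for this sketch.
check_java_home() {
  [ -x "$1/bin/java" ]
}

# Usage on the server (java -version writes to stderr, hence 2>&1):
#   java -version 2>&1 | java_version_from_output
#   check_java_home "$JAVA_HOME" && echo "JAVA_HOME looks valid"
```

If the first command prints nothing, the java binary on PATH is probably not the one configured in /etc/profile.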

Installing HDFS

Adding environment variables

Add the following to /etc/profile, then run source /etc/profile:

export HADOOP_HOME=/opt/hadoop-2.7.3

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

export HADOOP_COMMON_HOME=${HADOOP_HOME}

export HADOOP_HDFS_HOME=${HADOOP_HOME}

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_YARN_HOME=$HADOOP_HOME

export HADOOP_CMD=$HADOOP_HOME/bin/hadoop

export HADOOP_STREAMING=$HADOOP_HOME/share/hadoop/tools/lib/hadoop-streaming-2.7.3.jar

export YARN_HOME=${HADOOP_HOME}

export HADOOP_CONF_DIR=${HADOOP_HOME}/etc/hadoop

export HDFS_CONF_DIR=${HADOOP_HOME}/etc/hadoop

export YARN_CONF_DIR=${HADOOP_HOME}/etc/hadoop

export JAVA_LIBRARY_PATH=$HADOOP_HOME/lib/native

In /opt/hadoop-2.7.3/etc/hadoop/hadoop-env.sh, add export JAVA_HOME=/usr/local/jdk1.8.0_111 and source the file.
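Because /etc/profile is edited several times in this guide, the same export can easily end up in the file twice. The sketch below shows one possible way to avoid that: a hypothetical helper, append_once (not part of Hadoop or of the original setup), that appends a line to a file only when an identical line is not already present.

```bash
# append_once FILE LINE: append LINE to FILE unless an identical line exists.
# Hypothetical helper name, introduced for this sketch.
append_once() {
  local file=$1 line=$2
  touch "$file" || return 1
  # -x: match the whole line, -F: fixed string (no regex), -q: quiet
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage (try it on a scratch file before pointing it at /etc/profile):
#   append_once /etc/profile 'export HADOOP_HOME=/opt/hadoop-2.7.3'
#   append_once /etc/profile 'export PATH=$PATH:$HADOOP_HOME/bin'
```

The single quotes in the usage lines matter: they keep $PATH and $HADOOP_HOME unexpanded so that the literal text is written to the profile.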

Editing the configuration files

core-site.xml

Path: /opt/hadoop-2.7.3/etc/hadoop/core-site.xml. Add the following inside <configuration>:

<property>

<name>hadoop.tmp.dir</name>

<value>file:/opt/data/hadoop/tmp</value>

<description>A base for other temporary directories.</description>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

hdfs-site.xml

Path: /opt/hadoop-2.7.3/etc/hadoop/hdfs-site.xml. Add the following inside <configuration>:

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/opt/data/hadoop/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/opt/data/hadoop/data</value>

</property>

yarn-site.xml

Path: /opt/hadoop-2.7.3/etc/hadoop/yarn-site.xml. Add the following inside <configuration>:

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

mapred-site.xml

Path: /opt/hadoop-2.7.3/etc/hadoop/mapred-site.xml. Add the following inside <configuration>:

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>master.xyduan.com:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>master.xyduan.com:19888</value>

</property>
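The XML values above point at three local directories under /opt/data/hadoop (tmp, name, and data). Hadoop normally creates them itself when run as root, so the following is only an optional sketch for making permission problems visible before the first start. The function name make_hadoop_dirs is hypothetical and was introduced here; the base directory is a parameter so the function can be tried against a scratch location first.

```bash
# make_hadoop_dirs [BASE]: create the tmp, name and data directories.
# BASE defaults to /opt/data/hadoop, the prefix used in the XML values.
# Hypothetical helper name, introduced for this sketch.
make_hadoop_dirs() {
  local base=${1:-/opt/data/hadoop}
  local d
  for d in tmp name data; do
    mkdir -p "$base/$d" || return 1
  done
  echo "created under $base: tmp name data"
}

# Usage:
#   make_hadoop_dirs             # real paths, needs write access to /opt
#   make_hadoop_dirs /tmp/demo   # scratch location for a trial run
```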

启动HDFS

执行命令hdfs namenode -format格式化namenode

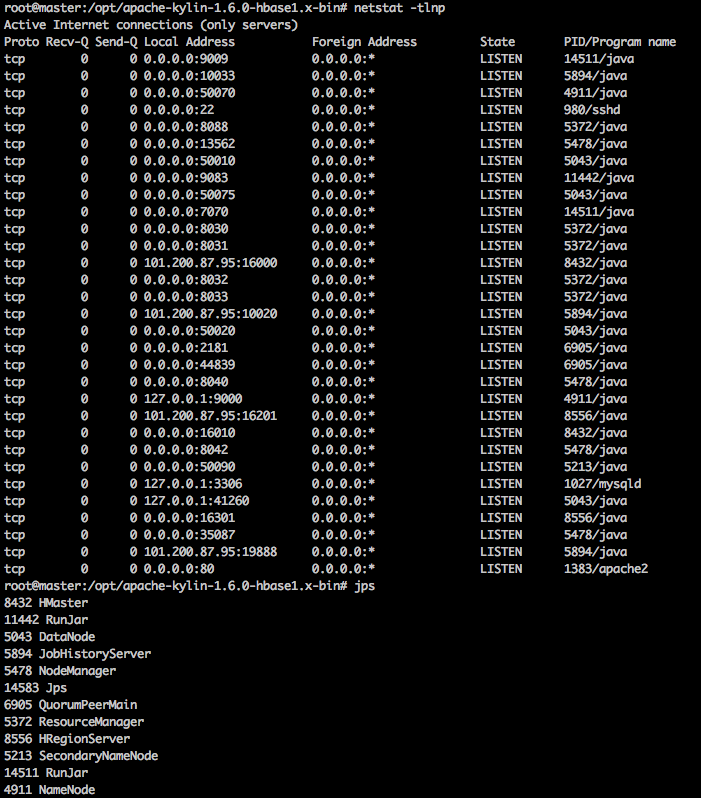

执行start-all.sh启动,再执行mr-jobhistory-daemon.sh start historyserver,正常结果使用jps命令,会出现以下内容(pid不固定)

5043 DataNode

5478 NodeManager

5372 ResourceManager

5756 Jps

5213 SecondaryNameNode

4911 NameNode
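Comparing the jps listing by eye is error-prone, so the comparison can be scripted. This is a minimal sketch under the assumption that jps prints one "<pid> <ClassName>" pair per line, as in the listing above; missing_daemons is a hypothetical function name introduced here. It reads jps output on stdin and prints every expected Hadoop daemon that is absent.

```bash
# missing_daemons: read `jps` output on stdin and print each expected
# daemon name that does not appear; prints nothing when all are present.
# Hypothetical helper name, introduced for this sketch.
missing_daemons() {
  local out name
  out=$(cat)
  for name in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # Compare the second field exactly, so that SecondaryNameNode
    # is not mistaken for NameNode.
    printf '%s\n' "$out" \
      | awk -v n="$name" '$2 == n { found = 1 } END { exit !found }' \
      || echo "$name"
  done
}

# Usage on the server:
#   jps | missing_daemons
```

An empty result means all five daemons from the listing are running; any printed name is a daemon whose log under the Hadoop logs directory is worth checking.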

http://master.xyduan.com:50070 and http://master.xyduan.com:8088 should both be reachable.

Run a test MapReduce job, hadoop jar /opt/hadoop-2.7.3/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar pi 5 100, and check that it shows up correctly at http://master.xyduan.com:8088.

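Installing ZooKeeper

Setting environment variables

Add the following to /etc/profile, then run source /etc/profile:

export ZOOKEEPER=/opt/zookeeper-3.4.9

export PATH=$PATH:$ZOOKEEPER/bin

Editing the configuration file

Edit /opt/zookeeper-3.4.9/conf/zoo.cfg so that it contains the following:

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/opt/data/zookeeper

clientPort=2181

Starting ZooKeeper

Run zkServer.sh start. jps will now also list QuorumPeerMain. Connect with zkCli.sh and run ls / to verify that ZooKeeper started correctly.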
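The zoo.cfg step can be reproduced with a short script. The sketch below is one possible approach, not the method used in the original setup: write_zoo_cfg is a hypothetical function name, and it takes the config path and data directory as parameters so it can be tested outside /opt. It writes the same five settings listed above and creates the data directory.

```bash
# write_zoo_cfg CFG DATA_DIR: write a single-node zoo.cfg and create DATA_DIR.
# Hypothetical helper name, introduced for this sketch.
write_zoo_cfg() {
  local cfg=$1 data_dir=$2
  mkdir -p "$data_dir" "$(dirname "$cfg")" || return 1
  cat > "$cfg" <<EOF
tickTime=2000
initLimit=10
syncLimit=5
dataDir=$data_dir
clientPort=2181
EOF
}

# Usage with the paths from this article:
#   write_zoo_cfg /opt/zookeeper-3.4.9/conf/zoo.cfg /opt/data/zookeeper
```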

Installing HBase

Setting environment variables

Add the following to /etc/profile, then run source /etc/profile:

export HBASE_HOME=/opt/hbase-1.2.4

export PATH=$PATH:$HBASE_HOME/bin

In /opt/hbase-1.2.4/conf/hbase-env.sh, add export JAVA_HOME=/usr/local/jdk1.8.0_111 and source the file.

Editing the configuration file

Path: /opt/hbase-1.2.4/conf/hbase-site.xml. Add the following inside <configuration>:

<property>

<name>hbase.rootdir</name>

<value>hdfs://localhost:9000/hbase</value>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/opt/data/zookeeper</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

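Make sure /opt/hbase-1.2.4/conf/regionservers contains localhost.

Starting HBase

Run start-hbase.sh. After a successful start, jps also lists HMaster and HRegionServer.

Enter hbase shell and run the following commands to verify that HBase is working; see http://hbase.apache.org/book.html#quickstart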

hbase(main):001:0> create 'test', 'cf'

0 row(s) in 0.4170 seconds

=> Hbase::Table - test

hbase(main):002:0> list 'test'

TABLE

test

1 row(s) in 0.0180 seconds

=> ["test"]

hbase(main):003:0> put 'test', 'row1', 'cf:a', 'value1'

0 row(s) in 0.0850 seconds

hbase(main):004:0> put 'test', 'row2', 'cf:b', 'value2'

0 row(s) in 0.0110 seconds

hbase(main):005:0> put 'test', 'row3', 'cf:c', 'value3'

0 row(s) in 0.0100 seconds

hbase(main):006:0> scan 'test'

ROW COLUMN+CELL

row1 column=cf:a, timestamp=1484897590018, value=value1

row2 column=cf:b, timestamp=1484897595294, value=value2

row3 column=cf:c, timestamp=1484897599617, value=value3

3 row(s) in 0.4040 seconds
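The same commands can be run non-interactively, since hbase shell accepts the path of a command file as its argument. The following sketch writes the commands from the session above into such a file. The function name write_hbase_smoke_test and the file path in the usage line are hypothetical choices made for this example.

```bash
# write_hbase_smoke_test FILE: write the verification commands to FILE.
# Hypothetical helper name, introduced for this sketch.
write_hbase_smoke_test() {
  local script=$1
  # The quoted 'EOF' keeps the shell from expanding anything in the body.
  cat > "$script" <<'EOF'
create 'test', 'cf'
list 'test'
put 'test', 'row1', 'cf:a', 'value1'
put 'test', 'row2', 'cf:b', 'value2'
put 'test', 'row3', 'cf:c', 'value3'
scan 'test'
exit
EOF
}

# Usage on the server:
#   write_hbase_smoke_test /tmp/hbase_smoke.rb && hbase shell /tmp/hbase_smoke.rb
```

The trailing exit returns control to the calling shell instead of leaving an interactive prompt open. Note that create fails if the test table already exists, so the script is only meant for a first run.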

Installing Hive

Creating the MySQL database and user

These store the Hive metadata:

mysql> create database hive;

mysql> grant all privileges on hive.* to hive@'%' identified by 'hive';

The database name, user name, and password are all set to hive here; Hive's main configuration file refers to these values.

Initializing the database schema

Pick the schema script that matches your Hive version:

mysql hive -uhive -p < /opt/apache-hive-2.1.1-bin/scripts/metastore/upgrade/mysql/hive-schema-2.1.0.mysql.sql

Setting environment variables

Add the following to /etc/profile, then run source /etc/profile:

export HIVE_HOME=/opt/apache-hive-2.1.1-bin

export HIVE_CONF_DIR=$HIVE_HOME/conf

export PATH=$HIVE_HOME/bin:$HIVE_HOME/conf:$HIVE_HOME/hcatalog/bin:$HIVE_HOME/hcatalog/sbin:$PATH

Editing the configuration file

Copy the template to create hive-site.xml:

cp /opt/apache-hive-2.1.1-bin/conf/hive-default.xml.template /opt/apache-hive-2.1.1-bin/conf/hive-site.xml

Change the following properties:

<property>

<name>hive.exec.local.scratchdir</name>

<value>/opt/hive/iotmp/root</value>

<!--<value>${system:java.io.tmpdir}/${system:user.name}</value>-->

<description>Local scratch space for Hive jobs</description>

</property>

<property>

<name>hive.downloaded.resources.dir</name>

<value>/opt/hive/iotmp/${hive.session.id}_resources</value>

<!--<value>${system:java.io.tmpdir}/${hive.session.id}_resources</value>-->

<description>Temporary local directory for added resources in the remote file system.</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>hive</value>

<description>password to use against metastore database</description>

</property>

<property>

<name>hive.metastore.ds.connection.url.hook</name>

<value/>

<description>Name of the hook to use for retrieving the JDO connection URL. If empty, the value in javax.jdo.option.ConnectionURL is used</description>

</property>

<property>

<name>javax.jdo.option.Multithreaded</name>

<value>true</value>

<description>Set this to true if multiple threads access metastore through JDO concurrently.</description>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost/hive?createDatabaseIfNotExist=true</value>

<!--<value>jdbc:derby:;databaseName=metastore_db;create=true</value>-->

<description>

JDBC connect string for a JDBC metastore.

To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL.

For example, jdbc:postgresql://myhost/db?ssl=true for postgres database.

</description>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

<!--<value>true</value>-->

<description>

Enforce metastore schema version consistency.

True: Verify that version information stored in is compatible with one from Hive jars. Also disable automatic

schema migration attempt. Users are required to manually migrate schema after Hive upgrade which ensures

proper metastore schema migration. (Default)

False: Warn if the version information stored in metastore doesn't match with one from in Hive jars.

</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<!--<value>org.apache.derby.jdbc.EmbeddedDriver</value>-->

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

<description>Username to use against metastore database</description>

</property>

<property>

<name>hive.querylog.location</name>

<value>/opt/hive/iotmp/root</value>

<!--<value>${system:java.io.tmpdir}/${system:user.name}</value>-->

<description>Location of Hive run time structured log file</description>

</property>

<property>

<name>hive.server2.logging.operation.log.location</name>

<value>/opt/hive/iotmp/root/operation_logs</value>

<!--<value>${system:java.io.tmpdir}/${system:user.name}/operation_logs</value>-->

<description>Top level directory where operation logs are stored if logging functionality is enabled</description>

</property>
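Four of the properties above differ from the template only in that ${system:java.io.tmpdir} became /opt/hive/iotmp and ${system:user.name} became root. A possible way to apply those two substitutions mechanically is sketched below. The function name localize_hive_site is hypothetical, and the sketch rewrites every occurrence of the two placeholders in the file, including any that sit in properties or comments not listed in this article, so review the result before relying on it.

```bash
# localize_hive_site FILE: replace the two system placeholders in FILE.
# Hypothetical helper name, introduced for this sketch.
localize_hive_site() {
  local file=$1 tmp
  tmp=$(mktemp) || return 1
  # \$ is a literal dollar sign; braces are literal in basic regular expressions.
  sed -e 's|\${system:java\.io\.tmpdir}|/opt/hive/iotmp|g' \
      -e 's|\${system:user\.name}|root|g' "$file" > "$tmp" || return 1
  # Write back through redirection so the file keeps its owner and mode.
  cat "$tmp" > "$file" && rm -f "$tmp"
}

# Usage with the path from this article:
#   localize_hive_site /opt/apache-hive-2.1.1-bin/conf/hive-site.xml
```

The MySQL connection properties (URL, driver, user name, password) and hive.metastore.schema.verification still have to be edited by hand, since they are not simple placeholder substitutions.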

启动hive 拷贝mysql-connector-java-5.1.7-bin.jar至/opt/apache-hive-2.1.1-bin/lib中,链接 hive --service metastore &jps会增加RunJar

安装kylin 修改环境变量 在/etc/profile中增加以下内容,并执行source /etc/profileexport KYLIN_HOME=/opt/apache-kylin-1.6.0-hbase1.x-bin

export PATH=$PATH:$KYLIN_HOME/bin

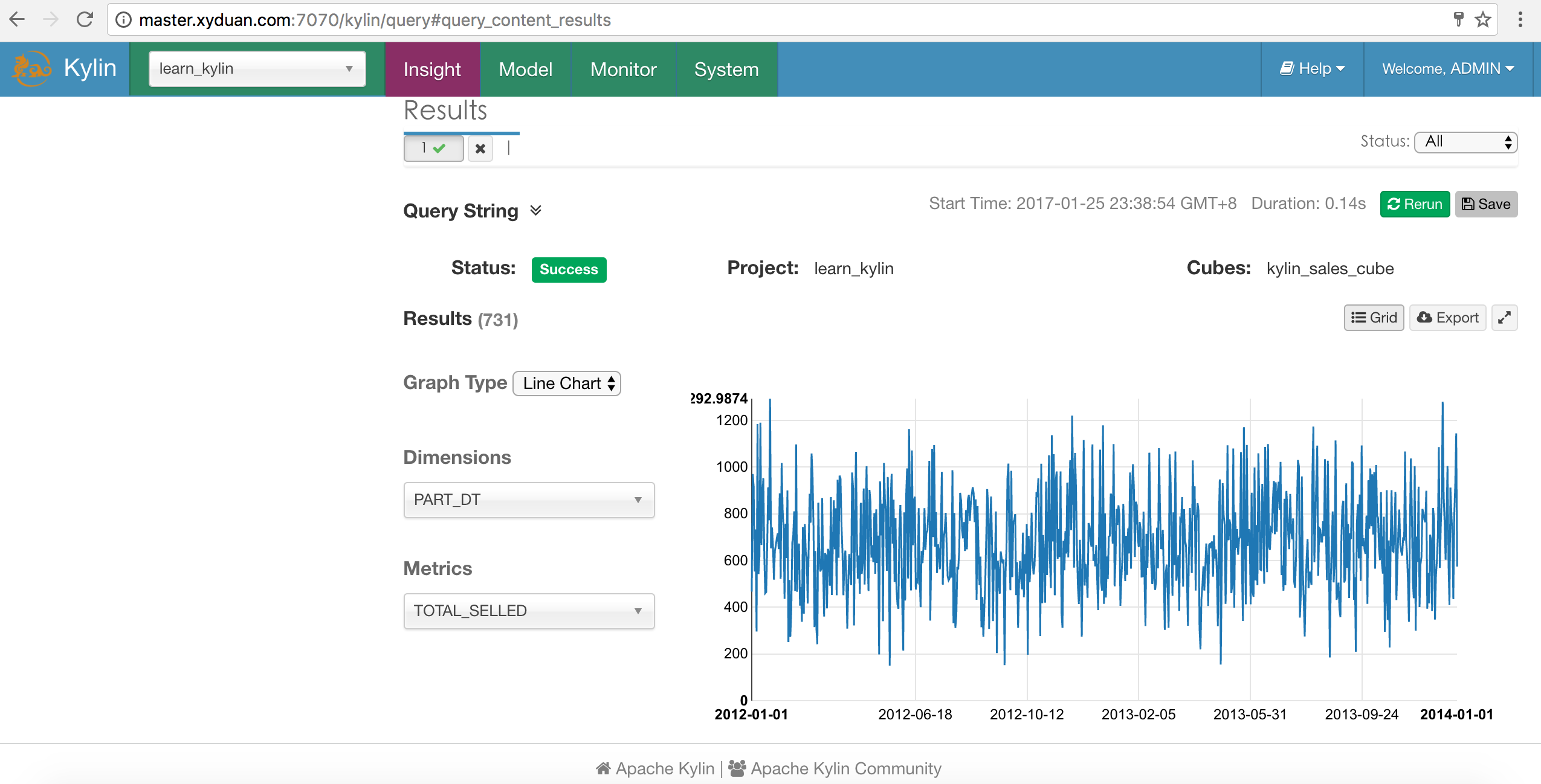

启动kylin kylin.sh start

启动自带例子 sample.sh,web页面重新载入
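After a reboot, the services have to be started again in the same order in which this article installed them. The sketch below collects the start commands from the previous sections into one function. The names start_stack, run, and DRY_RUN are hypothetical and were introduced here. By default the script only prints the commands; setting DRY_RUN=0 executes them, which assumes all the PATH entries from /etc/profile are in effect.

```bash
# DRY_RUN=1 (default) prints each command; DRY_RUN=0 executes it.
DRY_RUN=${DRY_RUN:-1}

# run CMD [ARGS...]: print or execute one command, depending on DRY_RUN.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# start_stack: start every service in the order used in this article.
start_stack() {
  run start-all.sh
  run mr-jobhistory-daemon.sh start historyserver
  run zkServer.sh start
  run start-hbase.sh
  # The metastore runs in the foreground, so it is sent to the background.
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ hive --service metastore &"
  else
    hive --service metastore &
  fi
  run kylin.sh start
}

# Usage:
#   start_stack              # print the commands only
#   DRY_RUN=0 start_stack    # actually start the services
```

The sketch does not wait for one service to be ready before starting the next; on a slow machine it may be necessary to check jps between steps.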